Usage limits for Deno Deploy are confusing

I typically prefer self-hosting my websites for greater control and flexibility. However, for straightforward projects like simple landing pages, which are mostly static with occasional dynamic elements such as markdown-based blog posts, I turn to Deno Deploy. It's a convenient option for quick deployments without the overhead of managing extra configuration on my server.

I'm currently developing a software project. To build early momentum, I decided to launch a basic landing page. The goal is to start accumulating traffic and SEO value, especially since I plan to commercialize the software down the line if things go smoothly.

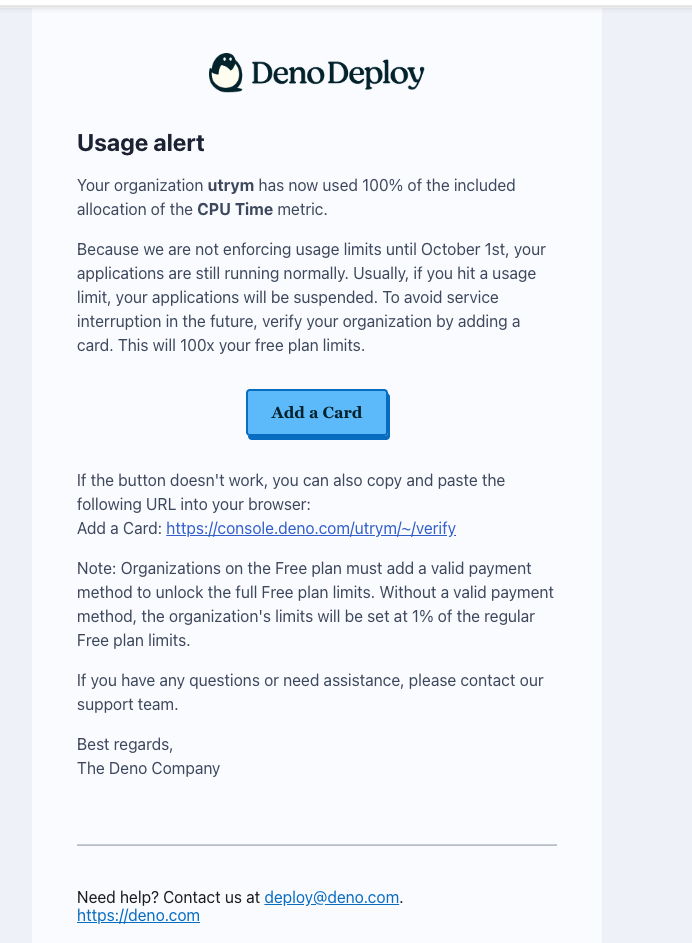

That said, I was caught off guard today when I received this email from Deno Deploy:

It warned of approaching usage limits on my account. This seemed odd for a temporary landing page with minimal content and no real functionality yet. Intrigued, I dug deeper, and what I uncovered was even more perplexing.

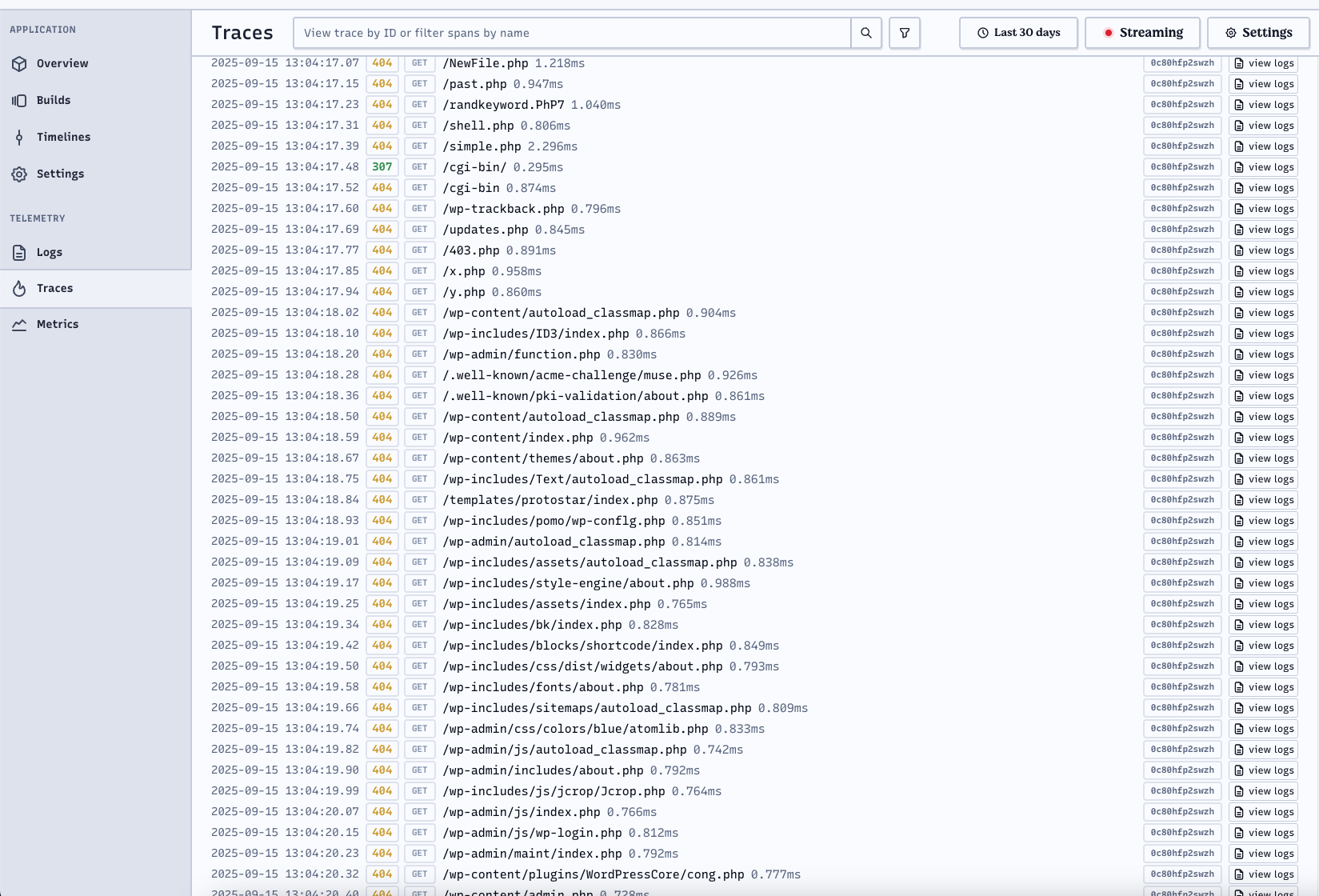

As the logs show, the majority of the traffic consists of automated bots scanning for common vulnerabilities. Requests for PHP files, for instance, make no sense on my site since I don't use PHP at all. I recognize that this kind of probing is commonplace on the web, but it raises a key question: Does this bot activity count toward my CPU usage limits?
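To get a rough sense of how much of the log is this kind of probing, a small tally like the one below can help. This is only a sketch: `looksLikePhpProbe` and `countProbes` are hypothetical helpers, the sample paths are made up for illustration rather than taken from my logs, and matching on a `.php` suffix is a deliberately crude heuristic.

```typescript
// Hypothetical helper: flags request paths that end in ".php".
// This site serves no PHP, so any such request is assumed to be a probe.
function looksLikePhpProbe(pathname: string): boolean {
  return pathname.toLowerCase().endsWith(".php");
}

// Hypothetical helper: counts how many paths look like probes and how many do not.
function countProbes(paths: string[]): { probes: number; other: number } {
  let probes = 0;
  for (const path of paths) {
    if (looksLikePhpProbe(path)) probes++;
  }
  return { probes, other: paths.length - probes };
}

// Made-up sample paths for illustration only.
const samplePaths = [
  "/",
  "/wp-login.php",
  "/blog/hello",
  "/xmlrpc.php",
  "/admin/config.PHP",
];

console.log(countProbes(samplePaths)); // { probes: 3, other: 2 }
```

The same check could in principle run at the top of a request handler to return a 404 before doing any other work, though whether that would meaningfully change the metered usage is exactly what I can't tell from the outside.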

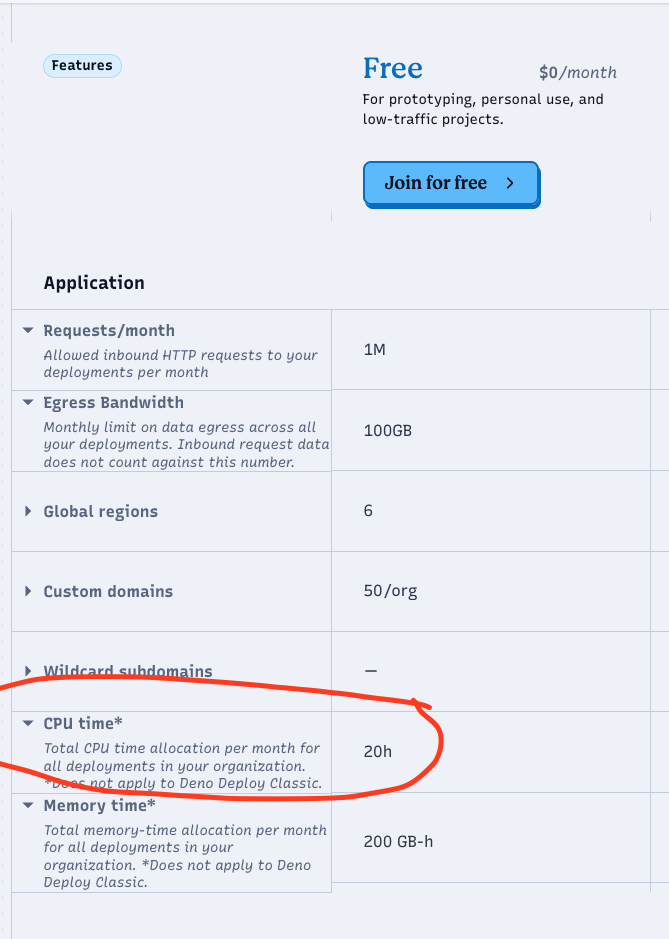

The email didn't clarify which limits applied to my specific project, so I headed to Deno Deploy's pricing page for details.

According to the pricing page, the free tier includes up to 20 hours of CPU time. That sounds generous, but when I checked my actual metrics, the total CPU usage over the last 30 days was just 3326 milliseconds, equivalent to about 0.00092 hours. I have only one other project in their playground, which sees even less activity. So, how on earth am I approaching any limits?
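For anyone who wants to check the arithmetic, here is a minimal sketch of the conversion. The two inputs (3326 ms used, 20 hours allowed) are the figures quoted above; the function names are my own, and I'm assuming the limit is a simple total of CPU time, which is precisely the part I can't verify.

```typescript
const MS_PER_HOUR = 60 * 60 * 1000; // 3,600,000 ms in one hour

// Converts CPU time in milliseconds to hours.
function cpuMsToHours(ms: number): number {
  return ms / MS_PER_HOUR;
}

// Returns the fraction of the allowance consumed (0.5 would mean 50%).
function shareOfLimit(usedMs: number, limitHours: number): number {
  return cpuMsToHours(usedMs) / limitHours;
}

const usedMs = 3326; // CPU time from my metrics, last 30 days
const limitHours = 20; // free-tier allowance as I read it

const usedHours = cpuMsToHours(usedMs);
const share = shareOfLimit(usedMs, limitHours);

console.log(`${usedHours.toFixed(5)} hours used`); // 0.00092 hours used
console.log(`${(share * 100).toFixed(4)}% of the limit`); // 0.0046% of the limit
```

By that reading I've used well under a hundredth of a percent of the CPU allowance, so whatever triggered the alert is presumably some other limit or some other way of counting.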

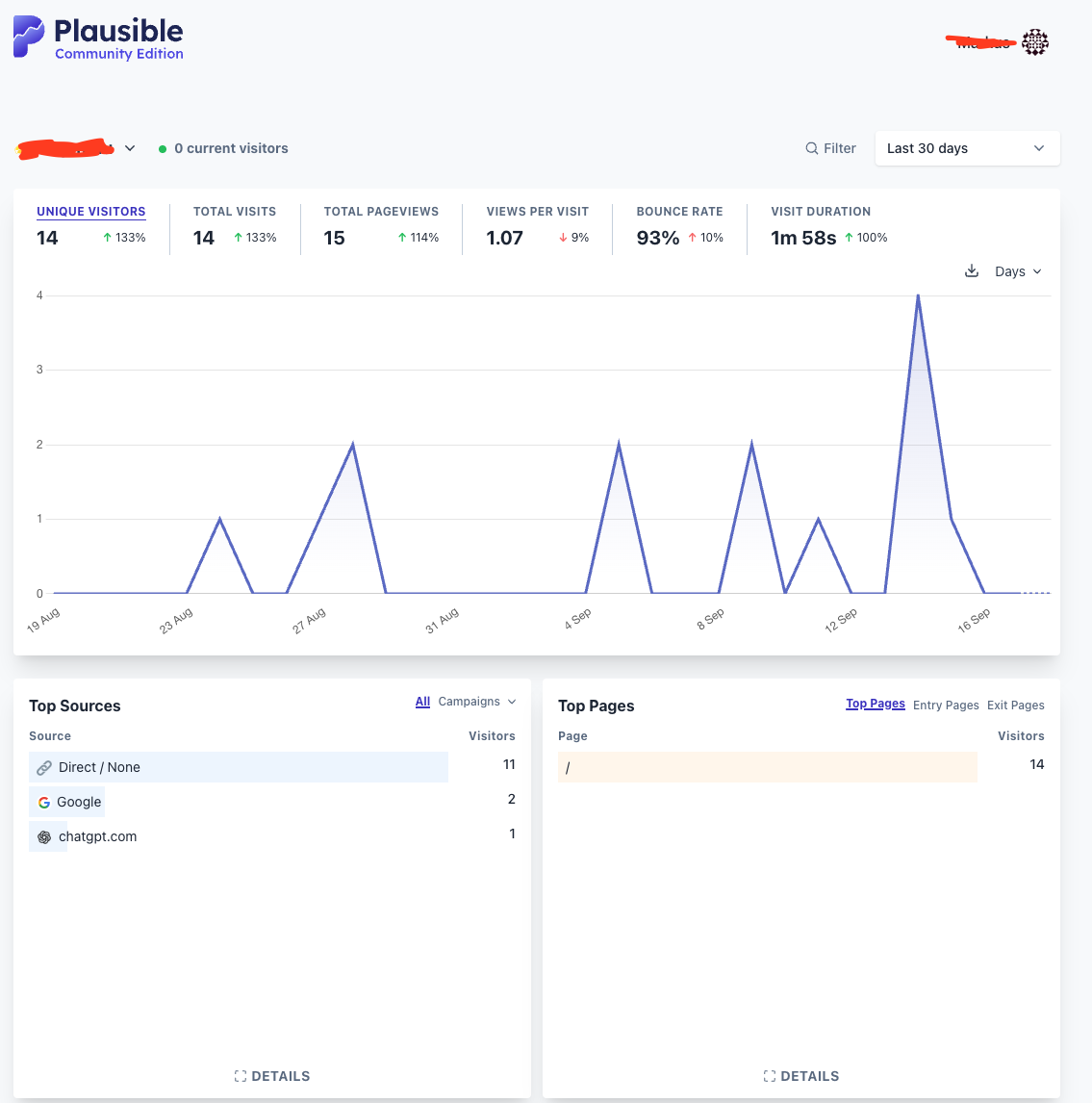

My Plausible analytics confirm this: Over the past 30 days, I've had roughly 14 genuine visitors. Real traffic is negligible, which makes the usage alert all the more baffling.

If this pattern persists, I may need to migrate my projects elsewhere to avoid any enforced shutdowns. More broadly, I feel there's a notable lack of transparency in how Deno Deploy calculates these limits. It would be immensely helpful if their alerts included specifics, such as which limit is being approached, how the usage was calculated, and perhaps a breakdown by project or traffic type.

I'm a fan of Deno overall and genuinely hope it succeeds as a platform. But building trust requires clear communication, especially around resource usage. If they address this, it could make a big difference for users like me relying on it for lightweight deployments. Has anyone else run into similar issues? I'd love to hear your thoughts.